Evolution of GenAI Search & Chatbots

The Retrieval-Augmented Generation (RAG) approach underpins how a GenAI search engine works. RAG uses vector embeddings and semantic search to narrow down the content scope, then generates a response from that narrowed content using third-party APIs. The approach is simple and elegant. The need for a conversational interface has led many companies to adopt open-source frameworks such as LangChain, which powers many chatbots. MongoDB has also open-sourced its approach. Both GenAI-powered search engines and chatbots are gaining traction among a new wave of tech-savvy customers who want to get things done more quickly. The benefits of these tools are manifold:

- Helps to learn better through iterative probing

- Assists in producing accurate answers to customer questions

- Feels natural to have a conversation

As customers become more tech-savvy and pressed for time, they increasingly look to resolve their queries themselves. Enterprises have to empower those customers with the right self-service tools, such as a chatbot. Enterprise organizations use GenAI search and chatbots predominantly to deflect support tickets, and many report higher customer satisfaction scores after introducing chatbots inside their products and information portals. Resolving customer queries in-app offers a rich search experience. A chatbot gives customers a good knowledge experience and stimulates learning.
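To make the retrieval step of RAG described at the start of this section more concrete, here is a minimal sketch. It is an illustration under stated assumptions, not how any particular product implements it: the `embed` function is a toy bag-of-words stand-in for a real embedding model, the knowledge base chunks are made-up sample text, and the final call to a third-party LLM API is left out, so the sketch stops once the prompt is built.

```python
import math
import re
from collections import Counter


def embed(text: str) -> Counter:
    """Toy stand-in for an embedding model: a bag-of-words count vector."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two sparse count vectors."""
    dot = sum(a[t] * b[t] for t in a.keys() & b.keys())
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


def retrieve(question: str, chunks: list[str], k: int = 2) -> list[str]:
    """Narrow the content scope to the k chunks most similar to the question."""
    q = embed(question)
    ranked = sorted(chunks, key=lambda c: cosine(q, embed(c)), reverse=True)
    return ranked[:k]


def build_prompt(question: str, context: list[str]) -> str:
    """Assemble the text that would be sent to the generation API."""
    joined = "\n".join(f"- {c}" for c in context)
    return f"Answer using only this context:\n{joined}\n\nQuestion: {question}"


# Hypothetical knowledge base chunks, invented for this example.
CHUNKS = [
    "To insert a glossary term, open the article editor and choose the glossary option.",
    "Billing invoices can be downloaded from the account settings page.",
    "Glossary terms are managed from the content tools menu.",
]

question = "How do I insert a glossary term into an article?"
top = retrieve(question, CHUNKS, k=2)
prompt = build_prompt(question, top)
print(prompt)
```

In a real system the toy `embed` would be replaced by a proper embedding model and the chunks would live in a vector store, but the shape of the flow (embed, rank, narrow, then generate) stays the same.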

Beyond AI: LLM Agents Will Shape the Future

LLM agents are poised to become the next big thing. LLMs make these agents more intelligent and able to take action. Agents may be required to involve a human before making decisions, so human-in-the-loop principles should be applied when designing these systems. The future is exciting given the rise of LLM agents that are programmed to perform tasks, make decisions, and communicate with other LLM agents to exchange information. These agents can leverage collaborative intelligence to undertake sophisticated tasks more quickly and easily.

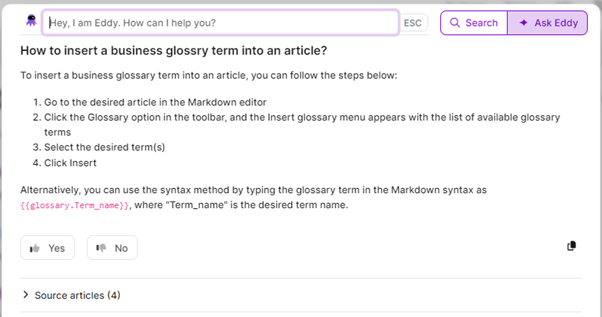

Imagine asking a GenAI-powered search engine for a “how-to” procedure. For example, on docs.document360.com, you can ask Eddy AI, “How to insert a business glossary term into an article”.

Eddy AI produces four steps that need to be undertaken to accomplish this task. These steps are sequential, and they may or may not need human input to continue. If a GenAI-based search can produce a sequence of steps for a task, an LLM agent can understand those steps and execute them inside the product. This can be accomplished in two ways:

- Traditional UI automation

UI actions can be automated using traditional technology such as Robotic Process Automation (RPA). LLM agents can be far more versatile at UI automation, executing steps as instructed by another GenAI-based agent. This involves identifying the different UI elements and understanding what actions each element supports. Tools such as AskUI can automate UI interactions based on instructions.

- Utilizing function calling via APIs

Depending on customer requirements, you may want to accomplish a task using different APIs under the hood. Function calling enables you to call different APIs depending on the scenario.
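The function-calling route can be sketched roughly as follows. The idea is that the model returns a structured request (a function name plus arguments) and the application decides which API to invoke. Everything named here is hypothetical: `insert_glossary_term` and `publish_article` are placeholder functions rather than a real product API, and the model's output is simulated as a JSON string instead of coming from an actual LLM.

```python
import json
from typing import Callable

# Registry of functions the agent is allowed to call.
TOOLS: dict[str, Callable[..., str]] = {}


def tool(fn: Callable[..., str]) -> Callable[..., str]:
    """Register a function under its own name so it can be dispatched to."""
    TOOLS[fn.__name__] = fn
    return fn


@tool
def insert_glossary_term(article_id: str, term: str) -> str:
    # Hypothetical: a real implementation would call the product's API here.
    return f"Inserted '{term}' into article {article_id}"


@tool
def publish_article(article_id: str) -> str:
    # Hypothetical: a real implementation would call the product's API here.
    return f"Published article {article_id}"


def dispatch(model_output: str) -> str:
    """Parse the model's structured call and route it to the matching function."""
    call = json.loads(model_output)
    name = call["name"]
    if name not in TOOLS:
        raise ValueError(f"Unknown function: {name}")
    return TOOLS[name](**call["arguments"])


# Simulated model output; a real LLM would produce this from the user's request.
simulated = '{"name": "insert_glossary_term", "arguments": {"article_id": "A-42", "term": "SLA"}}'
result = dispatch(simulated)
print(result)
```

Keeping an explicit registry means the agent can only trigger functions the application has deliberately exposed, which is one simple way to bound what it can do.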

LLM agents can undertake tasks based on:

- Their assigned roles and responsibilities

- The behavior they must adhere to

- Which inputs they can and cannot accept

- The expected outcome of their task

- Whether human intervention is needed
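One way to picture the list above is as a small specification object that an agent runtime checks before acting. This is a sketch only: `AgentSpec`, `run_task`, and the field names are assumptions made for illustration and do not correspond to any specific framework, and the human approval step is modeled as a plain callback.

```python
from dataclasses import dataclass
from typing import Callable


@dataclass
class AgentSpec:
    role: str                      # assigned role and responsibilities
    behavior: str                  # behavior the agent must adhere to
    accepted_inputs: set[str]      # input types the agent may act on
    expected_outcome: str          # what a finished task should look like
    needs_human_approval: bool = False  # human-in-the-loop switch


def run_task(
    spec: AgentSpec,
    input_type: str,
    payload: str,
    act: Callable[[str], str],
    approve: Callable[[str], bool],
) -> str:
    """Run one task, enforcing the input rules and the human approval gate."""
    if input_type not in spec.accepted_inputs:
        return f"rejected: {spec.role} does not accept '{input_type}'"
    if spec.needs_human_approval and not approve(payload):
        return "halted: human reviewer declined"
    return act(payload)


# Hypothetical agent that executes how-to steps and asks a human first.
spec = AgentSpec(
    role="glossary editor",
    behavior="only edit draft articles",
    accepted_inputs={"how_to_steps"},
    expected_outcome="term inserted into the article",
    needs_human_approval=True,
)

outcome = run_task(
    spec,
    "how_to_steps",
    "insert term 'SLA'",
    act=lambda p: f"done: {p}",
    approve=lambda p: True,  # stands in for a real human decision
)
print(outcome)
```

In practice the `approve` callback would pause and wait for a person, for example through a review queue, rather than returning immediately.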

These processes can be run in three ways:

- Sequential: This is suitable for tasks where the orderly progression of steps matters. In a knowledge base, this is ideal for executing a “how-to” procedure.

- Hierarchical: This is suitable for a managerial hierarchy where tasks are delegated to other LLM agents and executed based on certain commands. This favors more asynchronous execution of the steps needed to accomplish a task.

- Consensus-based: This is suitable for tasks that involve collaborative decision-making. This is ideal for any task where a decision draws on the outputs of other LLM agents.
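The three styles described above (step-by-step, delegated, and collaborative) can be sketched with plain functions standing in for agents. This is a simplified illustration, not a description of any framework: the `Agent` type and the `run_sequential`, `run_hierarchical`, and `run_consensus` helpers are hypothetical names, the "manager" is reduced to a function that hands out subtasks, and collaborative decision-making is approximated by a majority vote.

```python
import asyncio
from collections import Counter
from typing import Callable

# An agent is modeled as a plain function from text to text (an assumption).
Agent = Callable[[str], str]


def run_sequential(agents: list[Agent], task: str) -> str:
    """Each agent works on the previous agent's output, in order."""
    result = task
    for agent in agents:
        result = agent(result)
    return result


async def run_hierarchical(
    workers: dict[str, Agent], subtasks: dict[str, str]
) -> dict[str, str]:
    """A manager delegates subtasks to named workers and gathers the results."""

    async def delegate(name: str, subtask: str) -> tuple[str, str]:
        # Run each worker in a thread so the subtasks proceed concurrently.
        return name, await asyncio.to_thread(workers[name], subtask)

    pairs = await asyncio.gather(*(delegate(n, s) for n, s in subtasks.items()))
    return dict(pairs)


def run_consensus(agents: list[Agent], question: str) -> str:
    """Every agent answers; the most common answer wins."""
    votes = Counter(agent(question) for agent in agents)
    return votes.most_common(1)[0][0]


# Sequential: steps of a how-to procedure applied in order.
steps: list[Agent] = [
    lambda s: s + " -> open editor",
    lambda s: s + " -> insert term",
]
print(run_sequential(steps, "start"))

# Hierarchical: two workers handle their own subtasks concurrently.
workers: dict[str, Agent] = {"writer": str.title, "reviewer": str.upper}
print(asyncio.run(run_hierarchical(workers, {"writer": "draft intro", "reviewer": "check links"})))

# Consensus: three agents vote on a decision.
voters: list[Agent] = [lambda q: "approve", lambda q: "reject", lambda q: "approve"]
print(run_consensus(voters, "Publish the article?"))
```

The sequential version fits a how-to procedure because each step depends on the one before it, while the delegated version only needs the results collected at the end, which is why it can run asynchronously.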